Business software is everywhere. You can’t even look down without noticing it: if you’re wearing a watch, listening to music or reading this post, business software has been running in the background! Even taking a walk outside puts you in contact with business software: smart street lights, city parking meters and traffic signals are all doing their jobs using business software (and a ton of electricity).

The history of today’s business software is a little bit sketchy because most of it didn’t exist 50 years ago. Business intelligence software wasn’t around until the 1960s when manufacturers used punch cards to track their inventory. Of course, there are newspaper ad-hoc applications that have ties back to the 1800s.

Much business software is developed to meet the needs of a specific business, and therefore is not easily transferable to a different business environment unless its nature and operation are identical. Due to the unique requirements of each business, off-the-shelf software is unlikely to completely address a company’s needs. However, where an off-the-shelf solution is necessary, due to time or monetary considerations, some level of customization is likely to be required. Exceptions do exist, depending on the business in question, and thorough research is always required before committing to bespoke or off-the-shelf solutions.

Some business applications are interactive, i.e., they have a user interface (often a graphical one) through which users can query, modify and input data, view results and run reports instantaneously. Other business applications run in batch mode: they are set up to run on a predetermined event or at a predetermined time, and a business user does not need to initiate or monitor them.
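The difference between the two modes is mostly about what triggers the work, not the work itself. Here is a minimal sketch of that idea in Python. The names `order_totals` and `batch_is_due` and the order data are made up for illustration and are not taken from any real product.

```python
from datetime import datetime, time
from typing import Optional


def order_totals(orders: list[tuple[str, int]]) -> dict[str, int]:
    """The business logic itself: total order value per customer."""
    totals: dict[str, int] = {}
    for customer, amount in orders:
        totals[customer] = totals.get(customer, 0) + amount
    return totals


def batch_is_due(now: datetime, run_at: time, last_run: Optional[datetime]) -> bool:
    """Batch trigger: true once today's scheduled time has passed
    and the job has not yet run since that time."""
    scheduled = datetime.combine(now.date(), run_at)
    if now < scheduled:
        return False
    return last_run is None or last_run < scheduled


orders = [("acme", 120), ("globex", 75), ("acme", 30)]

# Interactive mode: a user action calls the logic directly and sees the result.
print(order_totals(orders))

# Batch mode: a scheduler checks the clock; no user starts or watches the job.
if batch_is_due(datetime(2024, 1, 2, 2, 30), time(2, 0), last_run=None):
    nightly_report = order_totals(orders)
```

In both cases `order_totals` is the same function. Only the caller changes: a person at a screen, or a scheduler watching the clock.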

Some business applications are built in-house and some are bought from vendors (off-the-shelf software products). These business applications are installed on either desktops or big servers.

Before COBOL (a common business-oriented programming language, first specified in 1959) came into widespread use in the 1960s, manufacturers each had their own unique machine language. RCA’s language consisted of a 12-position instruction. For example, to read a record into memory, the first two digits would be the instruction (action) code. The next four positions of the instruction (an ‘A’ address) would be the exact leftmost memory location where you want the readable character to be placed. Four positions (a ‘B’ address) of the instruction would note the very rightmost memory location where you want the last character of the record to be located. A two-digit ‘B’ address also allows a modification of any instruction. Instruction codes and memory designations excluded the use of 8’s or 9’s. The first RCA business application was implemented in 1962 on a 4k RCA 301. The RCA 301, mid-frame 501, and large frame 601 began their marketing in early 1960.
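To make the 12-position layout described above concrete, here is a rough sketch of a decoder in Python. It follows the field widths given in the text (two positions for the action code, four for the ‘A’ address, four for the ‘B’ address). Treating the last two positions as a modifier field is an assumption, since the text does not say exactly where the modifier sits. The names `Instruction` and `decode` and the sample instruction are invented for illustration.

```python
from dataclasses import dataclass

ALLOWED_DIGITS = set("01234567")  # the text says 8's and 9's were excluded


@dataclass(frozen=True)
class Instruction:
    op_code: str    # positions 1-2: instruction (action) code
    a_address: str  # positions 3-6: leftmost memory location
    b_address: str  # positions 7-10: rightmost memory location
    modifier: str   # positions 11-12: ASSUMED modifier field


def decode(raw: str) -> Instruction:
    """Split a 12-position instruction into its fields and validate them."""
    if len(raw) != 12:
        raise ValueError("an instruction must be exactly 12 positions long")
    instr = Instruction(raw[0:2], raw[2:6], raw[6:10], raw[10:12])
    # Only the action code and the addresses are checked, as those are the
    # fields the text says excluded 8's and 9's.
    for name in ("op_code", "a_address", "b_address"):
        field = getattr(instr, name)
        if not set(field) <= ALLOWED_DIGITS:
            raise ValueError(f"{name} {field!r} may only use the digits 0-7")
    return instr


# Hypothetical instruction: action code 01, record placed from 0100 to 0177.
print(decode("010100017700"))
```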

In the early days of computing, businesses ran mainframes with software intended to speed up accounting work and cut its cost. One mainframe could do the job of 100 white-collar workers. This period, the late 50s and early 60s, saw some of the first instances of computers replacing jobs.

This all occurred in rooms very much like the following:

Environments like these set the precedent for building software, yet it’s important to notice the distinct lack of screens. A lot of the techniques and conventions born of this screen-less environment are still with us today.

The typewriter you see is connected to the mainframe and can be used to deliver instructions to the machine. In this environment, the only concern of the software is data in and data out.

To get to this point, a software team is given a business case. The case is modelled down to its core steps and procedures, a specification is produced, and then programmers start writing the software against the specification: “Given Y, do X then output A”. They keep going, ticking off features from the specification. Once all the features in the specification have a tick, the software is considered complete and is delivered to the customer.

70s and 80s: Enter the GUI and the PC

Throughout the 1970s Xerox ran a research programme that produced the Xerox Alto, a computer with a graphical user interface that allowed more nuanced interaction and visual feedback between computer and user. The Xerox Alto was the first computer to use the metaphor of the “desktop” and one of the first to make use of a mouse. The project is now often cited as the forerunner of the modern GUI (Graphical User Interface), and it heavily inspired Apple and Microsoft.

As we enter the 1980s, the decade of the Personal Computer, we start to see widespread use of screens. Gone are the typewriters from the staff desks, and in come small, general-purpose computing machines that run a handful of different programs at the whim of the user. Spreadsheets and databases are now in the hands of the general user, not only the specialist.

Conventions

This new paradigm ushered in a small explosion in operating systems and interfaces. They were all fairly different at first, but over the decade patterns emerged and common ways of doing things became normalised.

The rise of the PC and increase in computing power meant those research programs of the 70s were now being drawn upon to inform software design – and how we would interact with software – for the next 30 years.

Program windows, input boxes, toolbars, dropdown menus and buttons were shared across the different platforms. These patterns became ingrained in users’ minds: they expected to see them and understood how they worked.

This is pretty much where we stop. We now have the basic components that will dominate human-computer interaction for the foreseeable future, and it’s these components that are going to be used and abused.

90s: Begin the Abuse

It’s 1995 and your business has grown. It’s time to have some bespoke software written to manage your orders, invoices and customers. So you begin looking for IT specialists and you find Really Good IT Inc. They’ve been around 10 years, have a good track record and have worked with some big companies, so you hire them to do the work.

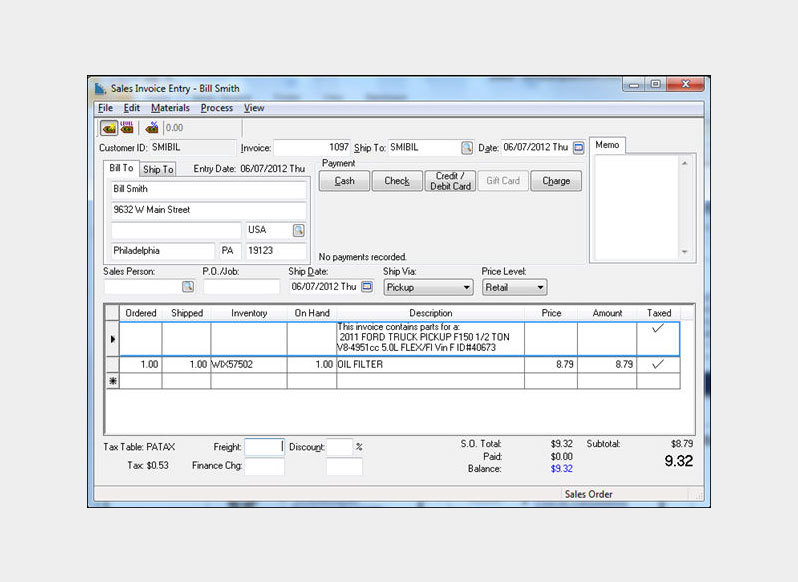

They come in and map out everything you need your software to do. You feel good: they’ve listened to your needs and seem like they know what they’re talking about. They go away for a few months and present you with something like this:

“Amazing!” you say, “it does everything we wanted it to!” You decide to deploy it across your business, you take a day out to train your staff, and on Monday morning the new system is being used!

So what is the problem here? Really Good IT Inc fulfilled their contract and the customer is happy. Well, the problem is that the customer had no expectations. They haven’t really used software before, so just the fact that the computer is doing the job is enough to excite and please them. The UI (User Interface) is an afterthought: it exposes the agreed functionality, but that is all it does. What this highlights is that even though the way we interact with software has changed, the way it is designed hasn’t. A suboptimal UI has an associated cognitive cost. You need to scan over the whole screen to find the details and you have to slowly learn how to use it, because there is no context and there are no clues about how it should be used. It simply gives you all the options on the screen at once.

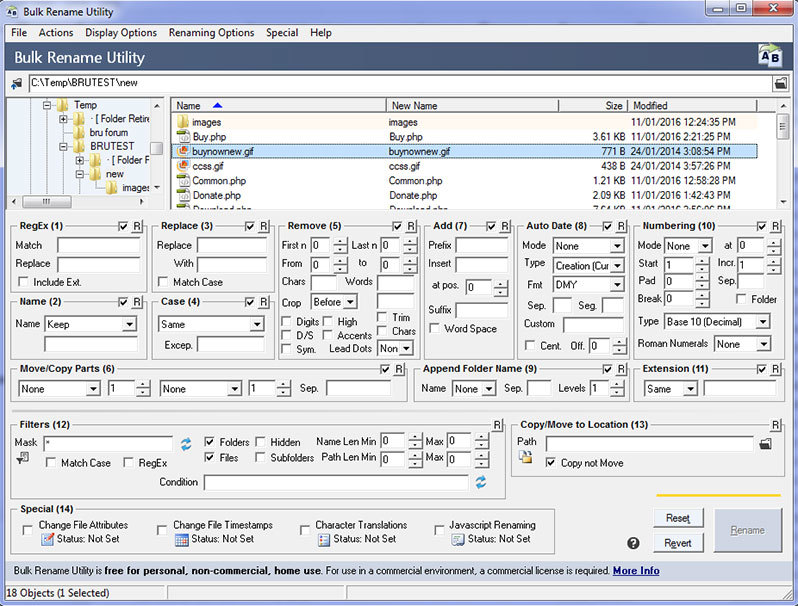

This demonstrates developers trying to be designers. It’s software, it’s on a computer, so logically you need a developer to make the UI, don’t you? “I just need to expose the functionality to the user” is a common misconception among developers, and it leads to interfaces like Bulk Rename for Windows:

There is a lot more to designing software than simply exposing functionality and features. The biggest hurdle is making sure a user’s mental model matches the actual program model: how a user expects a program to behave should match how the program actually behaves. The easiest way to do this is by sticking with known conventions, but that’s not enough. Sticking to known conventions has led us to where we are now, so what else is there? It’s all about guiding and setting those expectations from the get-go. How you display a widget, how you break down a procedure and how you frame the context all set expectations in users.

This understanding is now becoming normal in software. The web and mobile apps have reshaped our expectations of computing: it should be easy, it should make sense right away, and I shouldn’t need a 300-page manual or a full-day training course. The problem is that business and enterprise software has a tendency to stick around, and the companies that develop it haven’t necessarily caught up. Software is still in the realm of programmers and big lists of features.

So How Should We Approach User Interface Design?

Things are changing. Businesses now expect design to be as much a part of the software creation process as development is. Disciplines such as Human-Computer Interaction and User Experience Design have matured and taken their seats at the table (HCI is already a decades-old area of study). Designers who have never touched code now specialise in interface design.

Companies like Slack, Dropbox and MailChimp are developing software primarily used by businesses, yet they have put empathy and design thinking at the heart of their products. Simply exposing functionality is not enough for them, and it shouldn’t be for you.

Exploring the Slack management interface feels intuitive. I’m not afraid I’ll break something, I don’t need a manual to make the changes I want, and no one needs to train me because the interface trains me.

The biggest difference between a well-designed piece of software and a not-so-well-designed one is that the former shows a person only what they need, when they need it. Less clutter on the screen means you are not overwhelming the user. They begin building their mental models as they discover the functionality, and they discover it when they need it.

When you let the user learn as they go, they are more likely to make memorable connections and build a fuller understanding of the software they’re using. This is a much more pleasant experience, and the user associates simplicity and ease of use with your brand.
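The idea of showing a person only what they need when they need it can be sketched in a few lines of Python. This is an illustration only, assuming a made-up invoicing screen. The `Control` type, the task names and the `visible_controls` helper are hypothetical and do not describe how any of the products mentioned above are built.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Control:
    label: str
    needed_for: frozenset  # the tasks in which this control is relevant


# Everything the (hypothetical) invoicing screen is able to do.
ALL_CONTROLS = [
    Control("Customer name", frozenset({"create_invoice", "edit_invoice"})),
    Control("Line items", frozenset({"create_invoice", "edit_invoice"})),
    Control("Void invoice", frozenset({"edit_invoice"})),
    Control("Tax rules", frozenset({"configure"})),
    Control("Export format", frozenset({"configure"})),
]


def visible_controls(controls: list, current_task: str) -> list:
    """Return only the labels relevant to what the user is doing right now."""
    return [c.label for c in controls if current_task in c.needed_for]


# "Expose everything" approach: all five controls on screen at once.
print([c.label for c in ALL_CONTROLS])

# Task-driven approach: a user creating an invoice sees two controls.
print(visible_controls(ALL_CONTROLS, "create_invoice"))
```

The functionality is identical in both cases. What changes is how much of it the user has to scan past before finding what they came for.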

Conclusion:

The history of enterprise software begins with companies and the software they used to manage their businesses. Over time it evolved from applications that managed the IT infrastructure to applications that managed business processes, gathered all relevant information about a company’s customers, connected different departments and supported the exchange of data between different companies.